There are always some discussions about how Database Replay will work when there are additional data sources included. Mostly this problem occurs when there are database links involved.

The idea with Database Replay is to validate what a change will do to my database. A change can be everything from small changes like new values in init.ora parameters to new versions, new hardware or even workload consolidation.

One thing to have in mind is that when we do a capture we are capturing activities in one specific database and the only statistics that we collect are for this database. If the database calls other databases then it is actually out of the scope for this test since the change is for this database only. If we change something in another database then that change should be validated with SPA and Database Replay in the other databases and not this.

OK, but what if we do workload consolidation? Same theory is still applicable since a call over the database link then is captured in that database and would be duplicated during a consolidated replay.

One best practice that we have is to isolate the test database if it has external references that point to any production resource. If not then there can be problems that have impacts on the business.

A while back a team used Database Replay when upgrading a local police force database. The capture was done Friday eve since Friday eve is peak for this system. The replay was done the week after, during normal office hours. While the replay ran the team saw that the police force came to an alert and there were several police cars that went out on emergency calls. After a while they found out that it was actually the replay system that caused those emergency calls. The system was not isolated so Friday eve emergency calls were actually sent out again to the force.

Lesson learned, before next replay they terminated all references to external sources.

But now back to one of our problems: Database links. How will this impact a database replay if they are still active?

This actually depends on how they are implemented. Here are three different implementations.

- SQL statements with Bind variables

- SQL statements with literals

- SQL statements executed within a procedure

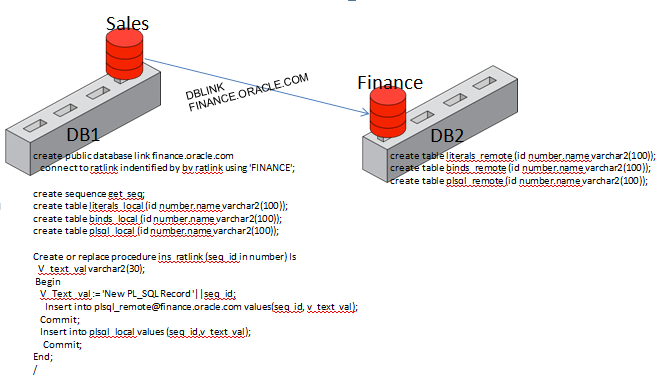

My configuration is two PDB’s in two separate databases. Schema ratlink in both PDB’s where sales have a database link to finance PDB

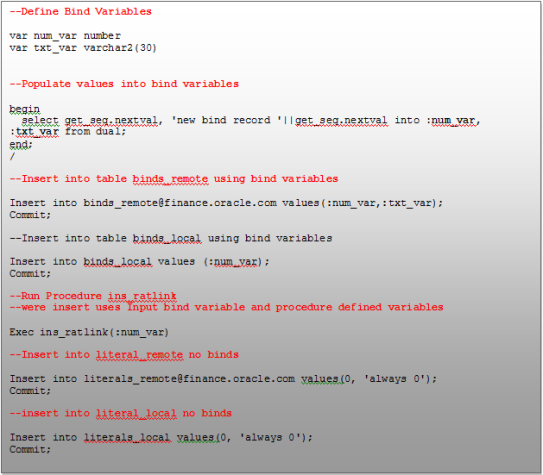

While capturing the workload I’m executing the following test from SQL Plus connected as user ratlink in PDB sales:

The result is following:

Let’s restore the database and replay the workload. Since it is a very simple test then it is just to delete all rows from these tables.

When the replay has finished we have a new result in our tables and the content is this:

So we can see that there is no row in the remote bind table but all other tables have rows. This means that we can insert data over the database link.

But the binds_remote table is not populated, Why is that?

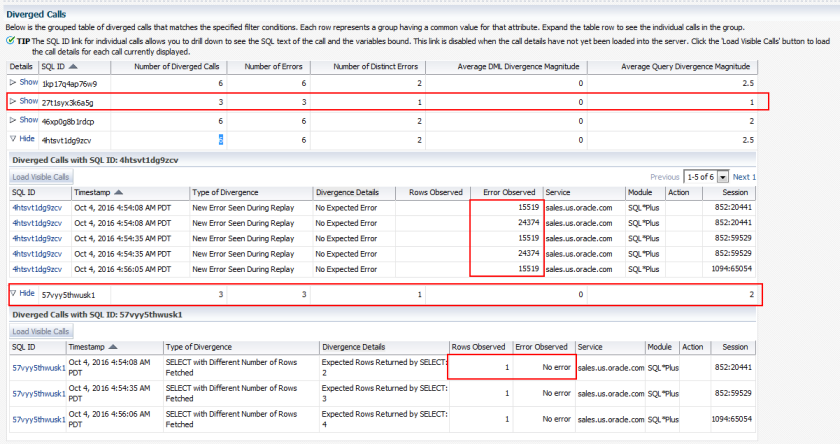

Let’s have a look if we had any errors during the replay.

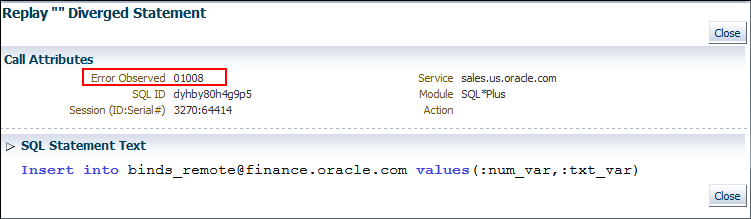

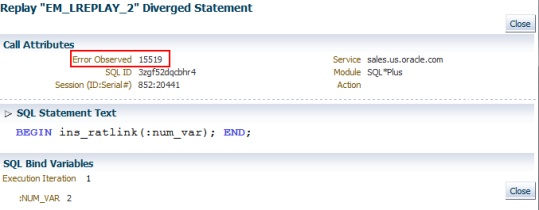

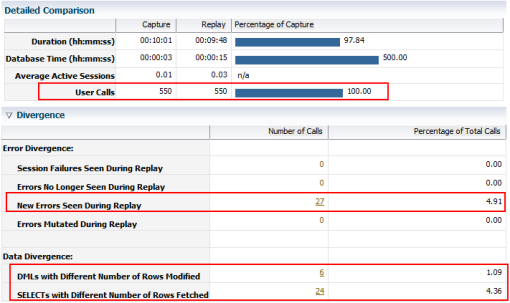

Yes we have. Just to make it more visible I took the liberty to use EM to show the error.

As we can see the insert into binds_remote have failed with ORA-01008 :Not all variables bound.

So the problem is actually not the database link itself, it is bind variables.

But we did an insert into the plsql_remote using bind variables as well but this table is populated, why is that?

A: When we inserted into table binds_remote we tried to use the bind variables but unfortunate we do not have those available and this is because we can not capture outgoing bind variables (we are investigating a solution for this).

But why does it work inside a PL/SQL procedure?

So the conclusion is that if we have calls using a database link then it will be executed if it doesn’t include binds variables or if it is included in a PL/SQL procedure

As a DBA our responsibility is that the database by it self doesn’t have connection to production system and we have control of the database so that’s where we can do restrictions. Here are some methods that we can use to handle database links:

- Point the link to a different database: Database activity that uses literals or access data inside a Pl/SQL procedure will proceed as they did during capture but activities using bind variables will fail with ORA-01008: “Not all binds bound”

- Point the link to cyberspace: No activities over the link will work. Direct SQL statements will fail with ORA-12154: “TNS: could not resolve the connection identifier specified”, ORA-12545: “Connection failed because target host or object does not exist” or ORA-01008: “Not all variables bound”. PL/SQL calls will fail with ORA-12154 or ORA-12545 and will either fail or do activities based on the exception handler inside the PL/SQL

- Use the advanced replay parameter called (DISABLE_DB_LINKS): This parameter will cause access to database links to hit ORA-15519: “cannot access database link “string” because it is disabled”. Be aware that it will not work on calls made from sessions not controlled by Database Replay.

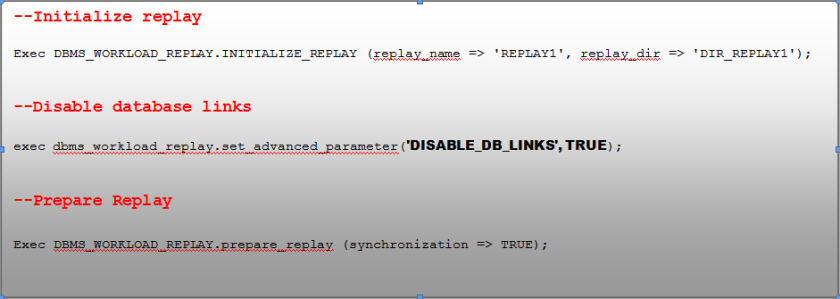

Below is an example of how to use the parameter and errors to expect:

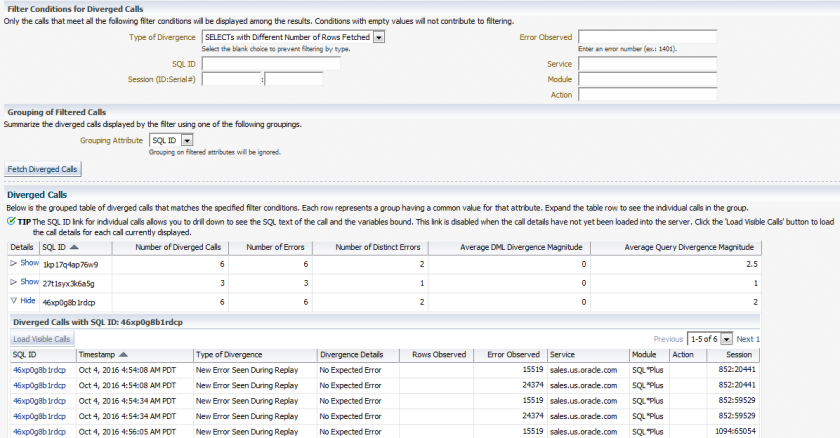

As in previous replay I have chosen to show show the replay result from Enterprise manager since it provides a better visibility.

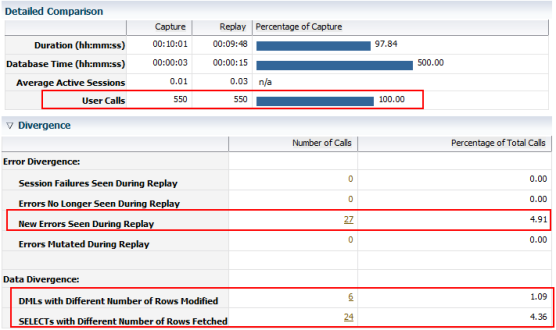

As always we start to figure out how representative this replay is compared to the production workload

- User calls: We have tried to replay all calls so this is good

- Session Failure: No database sessions have been terminated during replay

- New Errors: We had 27 calls that threw an error during replay but not during capture this is 4.9% of all calls. This needs to be investigated.

- DMLs with Different….: 6 calls 1.09% this should also be investigated just to figure out how it affects the workload

- Select with Different….: 24 calls 4.36% This needs to be investigated

In total we have 10.36% divergence and now it is up to you to decide if this is good or bad. I would say it is a rather good replay. Why?

We have actually been able to replay 89.64% off all calls.

If you know any other tool with the ability to replicate your production workload to the same extent then let me know.

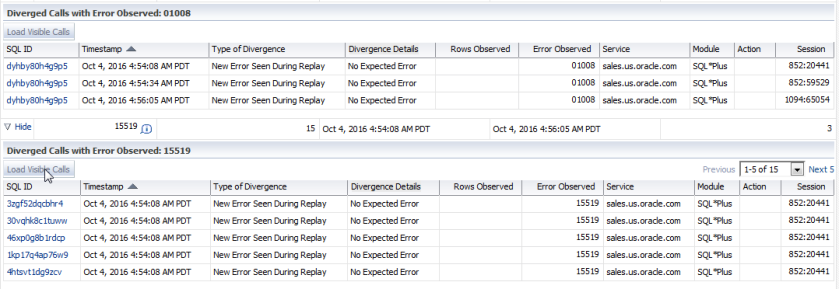

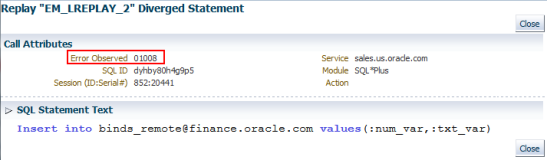

We can now drill down on individual error types and find the reason why they have occurred. and to make it more easy we can also group errors on errors observed, SQL_ID etc so we can see if one error is dependent on another.

Here I can see that my direct SQL with binds have failed with ORA-01008: Not all variables bound, this was expected. and other remote SQL statements have failed with ORA-15519: cannot access database link “string” because it is disabled. Also expected. Also seen but not shown is that any select statement getting ORA-15519 will return “ORA-24374: define not done before fetch” as well so the amount of failed calls are actually not 10.36% is even less.

The SQL_ID doesn’t provide you with so much information so you have the ability to see the statement if you click on the statement.

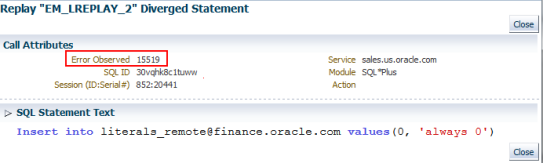

So here we can see that my direct call with literals and the PL/SQL call fails with ORA-15519 and that my call with binds fails with ORA-01008

OK but we had both SQL and DML with different number of rows. So lets analyze this result as well.

I have selected the divergence report for these statements and grouped them on SQL_ID and the result is quit interesting.

Same SQL_ID as in New Errors and they are showing Same error as we saw in previous example.

So the conclusion is that if an SQL statement or DML receives an error then it automatically reports less rows fetched.

Unfortunate this is a double reporting of error.

Lets see on the divergence overview to figure out the real divergence.

New errors Seen: I have 3 unique statements executed 3 times each reporting both ORA-15519 and ORA-24374 which will reduce the amount of errors by 9. We are down to 18 unique errors.

All divergence in “DML with Different rows…” has been reported as New errors seen.

We have now just our select statements to analyze and we can find that there are only 2 statements that have not been reported in New Errors Seen and they have been executed 3 times each

So the total divergence is actually down to 24 statements 4.36%, not bad at all. We have succeeded to execute 95.74% of the total workload.